I recently had to work with a feed from an entertainment vendor who distributes DVD, music CDs, and such. To the stores that use these files, they provide a rather confusing set of flat, tab delimited files, in a very un-userfriendly format. Since the store I was working for has a predefined format they want vendor files in to work with their search and navigation backend, we needed to transform these files to that format. This is where Kettle comes in.

The first file I need to deal with from them is their Products file. The file contains roughly 28 fields with various numbers that make no sense to me what so ever. The only fields I need to concern myself with are the Product ID, Product Name, Internal ID (used for mapping with the other files), a Category ID, the Artist, Price, and Availability Date. The other fields I can ignore. Since I am dealing with Categories I also need their Category file. Categories are going to be handled in a special way. I don’t need this in my actual file, but in a separate file, or files rather, that will be appended to a separate set of files only once. And the final file I need out of their set is their Attributes file, which will contain information about products such as if the product is Widescreen, Dubbed, Subtitled, etc. These are handled in a special way in the search backend, so I just need to provide them.

Figure 1. Conceptual Model of Data

What I need to do is transform this data into the format in Figure 2.

Figure 2. The Data Feed Format

The Artist field will go into Search Field 1, and the first two attributes I come across will go into search fields 2 and 3. Everything else will be a simple 1 to 1 mapping.

To get started, the first thing I need to do is start Kettle.exe, and create a new transformation. If you are using a repository, great, otherwise, choose No Repository at the startup screen. When you are in Kettle, go to File/New/Transformation.

Figure 3. New Transform

With the new file created, drag over 3 new Text File Inputs, located under the Core Objects/Input section, to the transformation area.

Figure 4. New Text Inputs

With the three text file inputs in the transformation, I need to set them up to read my data files. The first thing is to add the file to the input file list. Do this by clicking on Browse, then when you select your file, click on add. With the file selected, I now need to set up the delimiting information. These files are all tab delimited, with no text qualifiers (meaning no quotes around Strings), and no header row. So I click on the content and set the appropriate options. Since this is a tab delimited file, I need to click on the Insert Tab button to add in the tab.

Figure 5. Delimeter Options

Now I need to select the fields. To do that, since I have my file, all I need to do is click on the Get Fields button under the Fields tab. For developments sake, I will just name the fields that I need, and leave the field names alone for the remaining fields. Also, since I am just using these fields as description fields, I change the Date fields back to Strings.

Figure 6. Field selection

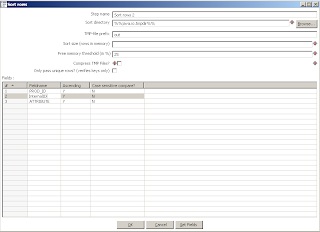

That’s it for Products, I do the same for the Categories and Attributes Tables. Now that the text inputs are set up, I need to do the transformations. The hardest part will be to denormalize and join the attributes into my input stream to feed into my output text file. The first step I need to take care of is sorting my data for the field row delimeter. So, I drag over a Sort Rows object from under the Transformation section. I need to connect the Attribute text data source to the Sort Rows object in order to edit it correctly. In order to do the connection, I need to hold down the Shift key on my keyboard, and drag my mouse from the Attibutes object to the Sort Rows object. This will indicate to the transformation that a “hop” in steps needs to occur between these two objects. Now, I edit my sort to sort based on the Product ID.

Figure 7. Sort Row Options

Next, I drag over a Select Values object and connect the Sort Rows object to it. In the Select/Alter tab, I click on Get Fields and leave everything default. Since I wont be using the non-named fields, I go over to the Remove tab, and select those fields.

Figure 8. Remove Fields

That was the easy part. Now I need to denormalize the data. What I want to do is have the first 3 attributes for each product to show up in consecutive columns. I tried using the Denormlizer here, with no success. So I ended up using the row flattener. The way the row flattener works is you define a single field that will contain the consecutive data. You then define additional columns. The flattener will then copy to each column in the order it receives data. So for example, lets say you have the following data defined in a field in your incoming data stream:

-Wide Screen

-Sub Titled

-Spanish

And in your row flattener, you defined the following target fields:

-FieldOne

-FieldTwo

-FieldThree

The flattener would assign the values like so:

-FieldOne = Wide screen

-FieldTwo = Sub Titles

-FieldThree = Spanish

It also seems that once unique values have been exhausted, it will just finish filling out the columns with the last unique value it encountered. For my purposes this is just fine. I define my field flattener with my values for Attribute Name in the following figure.

Figure 9. Field Flattener

So, with this final step in my transformation, my data stream for attribute will look something like:

-ProductID

-InternalID

-AttributeName

-Search_Field_1

-Search_Field_2

-Search_Field_3

-Search_Field_4

Now, I need to join it to my Products data stream. To do this I pull over a Join object. I need to join on my Internal ID or my Product ID since they both uniquely identify my product. So I will set that as the join. I also need to set this join type to Left Outer Join, since I need Products to show up even if there are no Attributes to join with. I also will set Products as my Primary feed with Attributes as my secondary feed. It is important that when you do the connections in the Transformation editor that you connect both the Products Text Input and the Row Flattener object.

Figure 10. Join

The final thing I need to do to my transformation is modify the Product ID. Since this is for a test feed, I need the product ID to be unique. I will do this with Java Script. I also need to modify the attribute field to remove any pipes in the data field, since my output file needs to be a pipe delimted text file. So, I will drag over a Java Script component, and in Javascript I will write the appropriate code to add in increments of 10 million to the product ID (which I will modify in sequential runs), and use the string replace method to replace any pipes.

Figure 11. Javascript Code.

The final part of this is to output my text file. I need to set my file name for output, delimiting options, and fields I will use in my Output File object.

Figure 12. Output Text Filename

Figure 13. Output Text Delimeter

Figure 14. Output text fields

You will notice in Figure 13 I have Append set. This will append every run of the transformation to the end of the output text file. I also have Price in twice since the Display Price and Actual Price are the same.

Now, the final part of this transformation is the Categories. The only thing I need special for Categories is a hard coded entry for my Super Category mapping in the internal search appliance for my categories in the vendor data feed. I accomplish this through a JavaScript component between the Categories Text Input and the Output files. I won’t show the Output files for Categories since it isn’t relevant to my main transformation with the Attributes and Products. The final figure shows my finished Transformation.

Figure 15. Finished Transformation

Now, I can create my dummy feed and add to it at the click of a button. Of course, this is applicable to actual production data transformations as well. Kettle does run a little slower than a hand coded solution, but not enough to rule it out due to the amount off time it saves in development and the amount of time saved in modifying it when necessary.

5 comments:

Thanks for the great article. I'm a big kettle fan, too, and always like to see how others are using it.

Rock on!

Rick

Hi, It would be nice if you upload the files needed to put in practice this article.

thanks for posting it!!

I think the tool is totally buggy. It has a nice looking interface, but is utterly frustrating to work with, even for the most simple task. Besides that, the performance is dismal

The Data Mapping engine in Data Transformation Server allows any-to-any transformations between different data formats. It includes complex data functions such as string, math, and conditional operations as well as DB and XML file look-up.

thank you so much. god bless you

Post a Comment